Compare commits

No commits in common. "main" and "master" have entirely different histories.

@ -1,35 +0,0 @@

*.7z filter=lfs diff=lfs merge=lfs -text
*.arrow filter=lfs diff=lfs merge=lfs -text
*.bin filter=lfs diff=lfs merge=lfs -text
*.bz2 filter=lfs diff=lfs merge=lfs -text
*.ckpt filter=lfs diff=lfs merge=lfs -text
*.ftz filter=lfs diff=lfs merge=lfs -text
*.gz filter=lfs diff=lfs merge=lfs -text
*.h5 filter=lfs diff=lfs merge=lfs -text
*.joblib filter=lfs diff=lfs merge=lfs -text
*.lfs.* filter=lfs diff=lfs merge=lfs -text
*.mlmodel filter=lfs diff=lfs merge=lfs -text
*.model filter=lfs diff=lfs merge=lfs -text
*.msgpack filter=lfs diff=lfs merge=lfs -text
*.npy filter=lfs diff=lfs merge=lfs -text
*.npz filter=lfs diff=lfs merge=lfs -text
*.onnx filter=lfs diff=lfs merge=lfs -text
*.ot filter=lfs diff=lfs merge=lfs -text
*.parquet filter=lfs diff=lfs merge=lfs -text
*.pb filter=lfs diff=lfs merge=lfs -text
*.pickle filter=lfs diff=lfs merge=lfs -text
*.pkl filter=lfs diff=lfs merge=lfs -text
*.pt filter=lfs diff=lfs merge=lfs -text
*.pth filter=lfs diff=lfs merge=lfs -text
*.rar filter=lfs diff=lfs merge=lfs -text
*.safetensors filter=lfs diff=lfs merge=lfs -text
saved_model/**/* filter=lfs diff=lfs merge=lfs -text
*.tar.* filter=lfs diff=lfs merge=lfs -text
*.tar filter=lfs diff=lfs merge=lfs -text
*.tflite filter=lfs diff=lfs merge=lfs -text
*.tgz filter=lfs diff=lfs merge=lfs -text
*.wasm filter=lfs diff=lfs merge=lfs -text
*.xz filter=lfs diff=lfs merge=lfs -text
*.zip filter=lfs diff=lfs merge=lfs -text
*.zst filter=lfs diff=lfs merge=lfs -text
*tfevents* filter=lfs diff=lfs merge=lfs -text
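
The patterns above route matching files through Git LFS. As a rough illustration of which file names they catch, here is a minimal Python sketch. It is an approximation only: it uses `fnmatch` on the file's base name, which mirrors how slash-free gitattributes patterns behave but does not reproduce Git's full matching rules (for example, the `saved_model/**/*` path pattern is deliberately left out). The helper name `is_lfs_tracked` and the pattern subset are hypothetical, chosen for the example.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# A subset of the slash-free patterns listed above (illustrative, not exhaustive).
LFS_PATTERNS = ["*.bin", "*.safetensors", "*.tar.*", "*.zip", "*tfevents*"]


def is_lfs_tracked(path: str, patterns=LFS_PATTERNS) -> bool:
    """Approximate check: does the file's base name match any pattern?

    Slash-free gitattributes patterns match the base name at any depth,
    so only the final path component is compared here.
    """
    name = PurePosixPath(path).name
    return any(fnmatchcase(name, pattern) for pattern in patterns)


if __name__ == "__main__":
    for candidate in ["model.safetensors", "data/archive.tar.gz", "README.md"]:
        print(candidate, is_lfs_tracked(candidate))
```

For an authoritative answer on a real checkout, `git check-attr filter -- <path>` reports the attribute Git actually resolves.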


42

README.md


@ -47,19 +47,37 @@ We are pleased to announce the release of **Ovis2**, our latest advancement in m


| Ovis2-34B | aimv2-1B-patch14-448 | Qwen2.5-32B-Instruct | [Huggingface](https://huggingface.co/AIDC-AI/Ovis2-34B) | - |


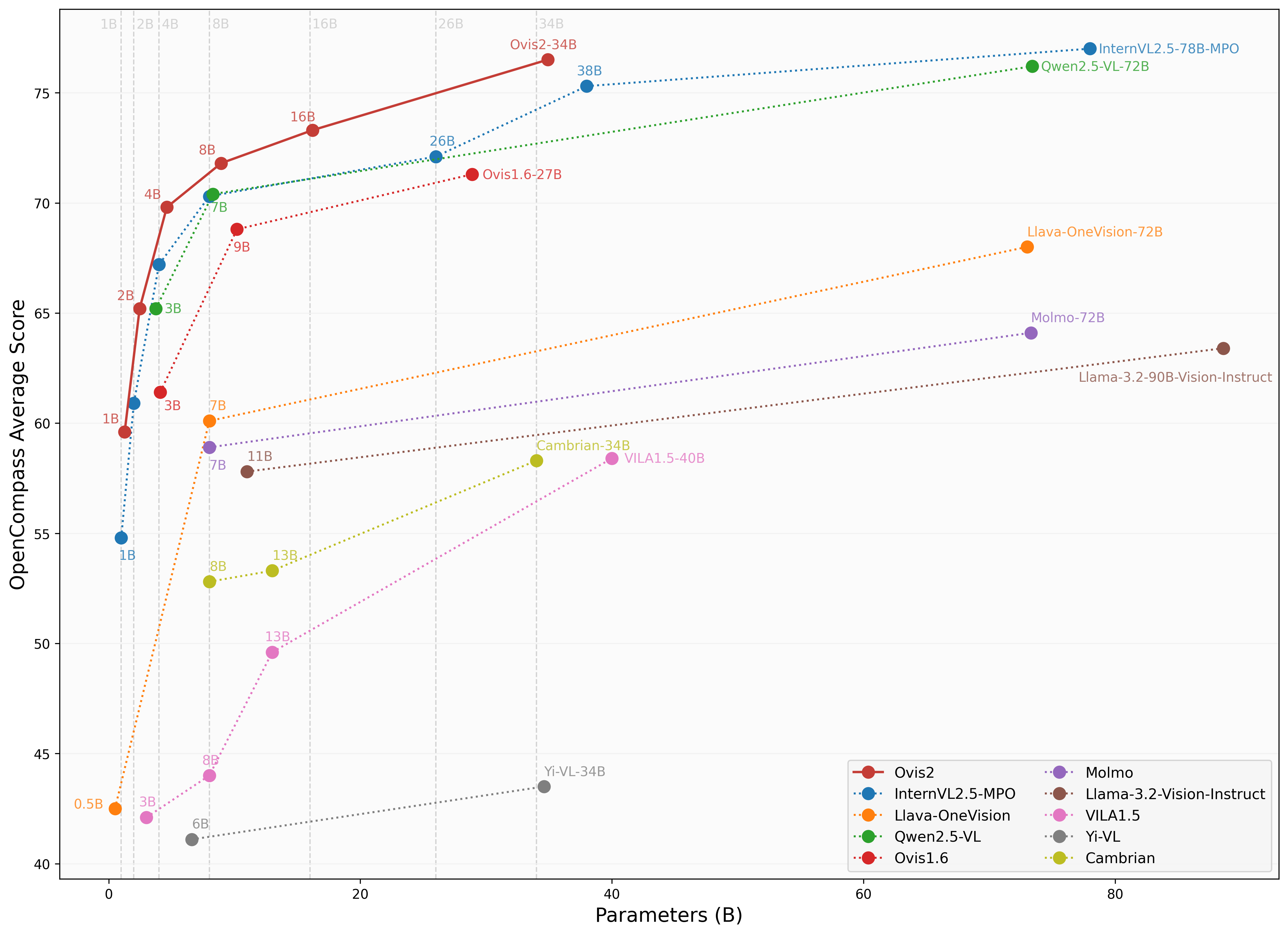

## Performance


We use [VLMEvalKit](https://github.com/open-compass/VLMEvalKit), as employed in the OpenCompass [multimodal](https://rank.opencompass.org.cn/leaderboard-multimodal) and [reasoning](https://rank.opencompass.org.cn/leaderboard-multimodal-reasoning) leaderboards, to evaluate Ovis2.


|Benchmark|Ovis2-1B|Ovis2-2B|Ovis2-4B|Ovis2-8B|Ovis2-16B|Ovis2-34B|
|:---:|:---:|:---:|:---:|:---:|:---:|:---:|
|MMBench-V1.1<sub>test</sub>|68.5|77.2|81.4|83.3|85.2|86.2|
|MMStar|52.0|59.0|61.7|64.4|66.9|69.4|
|MMMU<sub>val</sub>|36.0|45.3|48.0|59.0|59.6|65.6|
|MathVista<sub>testmini</sub>|59.5|64.4|69.1|71.4|74.9|77.0|
|HallBench<sub>avg</sub>|44.5|50.2|54.0|56.0|55.9|58.8|
|AI2D<sub>test</sub>|76.8|82.6|85.5|86.8|86.1|88.4|
|OCRBench|88.7|87.5|91.0|89.3|88.2|89.8|
|MMVet|50.3|58.6|65.5|68.5|68.4|75.5|
|Average|59.5|65.6|69.5|72.3|73.1|76.3|
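
The source does not say how the Average row is computed. The sketch below assumes it is the unweighted arithmetic mean of the eight benchmark scores in each column and checks that assumption against the reported values. The scores are copied from the table; the names `SCORES`, `REPORTED_AVERAGE`, and `max_average_gap` are illustrative.

```python
# Per-model scores, in table row order: MMBench-V1.1, MMStar, MMMU,
# MathVista, HallBench, AI2D, OCRBench, MMVet.
SCORES = {
    "Ovis2-1B": [68.5, 52.0, 36.0, 59.5, 44.5, 76.8, 88.7, 50.3],
    "Ovis2-2B": [77.2, 59.0, 45.3, 64.4, 50.2, 82.6, 87.5, 58.6],
    "Ovis2-4B": [81.4, 61.7, 48.0, 69.1, 54.0, 85.5, 91.0, 65.5],
    "Ovis2-8B": [83.3, 64.4, 59.0, 71.4, 56.0, 86.8, 89.3, 68.5],
    "Ovis2-16B": [85.2, 66.9, 59.6, 74.9, 55.9, 86.1, 88.2, 68.4],
    "Ovis2-34B": [86.2, 69.4, 65.6, 77.0, 58.8, 88.4, 89.8, 75.5],
}

# The Average row as reported in the table.
REPORTED_AVERAGE = {
    "Ovis2-1B": 59.5, "Ovis2-2B": 65.6, "Ovis2-4B": 69.5,
    "Ovis2-8B": 72.3, "Ovis2-16B": 73.1, "Ovis2-34B": 76.3,
}


def mean(values):
    """Unweighted arithmetic mean (the assumed averaging rule)."""
    return sum(values) / len(values)


def max_average_gap(scores=SCORES, reported=REPORTED_AVERAGE):
    """Largest absolute gap between a recomputed mean and the reported one."""
    return max(abs(mean(scores[m]) - reported[m]) for m in scores)


if __name__ == "__main__":
    for model, values in SCORES.items():
        print(f"{model}: recomputed {mean(values):.3f}, reported {REPORTED_AVERAGE[model]}")
```

Under this assumption every recomputed mean falls within rounding distance of the reported figure, which is consistent with a plain average over the eight benchmarks.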


### Image Benchmark


| Benchmark                    | Qwen2.5-VL-7B   | InternVL2.5-8B-MPO   | MiniCPM-o-2.6   | Ovis1.6-9B   | InternVL2.5-4B-MPO   | Ovis2-4B   | Ovis2-8B   |
|:-----------------------------|:---------------:|:--------------------:|:---------------:|:------------:|:--------------------:|:----------:|:----------:|
| MMBench-V1.1<sub>test</sub> | 82.6 | 82.0 | 80.6 | 80.5 | 77.8 | 81.4 | **83.6** |
| MMStar | 64.1 | **65.2** | 63.3 | 62.9 | 61 | 61.9 | 64.6 |
| MMMU<sub>val</sub> | 56.2 | 54.8 | 50.9 | 55 | 51.8 | 49.0 | **57.4** |
| MathVista<sub>testmini</sub> | 65.8 | 67.9 | **73.3** | 67.3 | 64.1 | 69.6 | 71.8 |
| HallusionBench | **56.3** | 51.7 | 51.1 | 52.2 | 47.5 | 53.8 | **56.3** |
| AI2D | 84.1 | 84.5 | 86.1 | 84.4 | 81.5 | 85.7 | **86.6** |
| OCRBench | 87.7 | 88.2 | 88.9 | 83 | 87.9 | **91.1** | 89.1 |
| MMVet | 66.6 | **68.1** | 67.2 | 65 | 66 | 65.5 | 65.1 |
| MMBench<sub>test</sub> | 83.4 | 83.2 | 83.2 | 82.7 | 79.6 | 83.2 | **84.9** |
| MMT-Bench<sub>val</sub> | 62.7 | 62.5 | 62.3 | 64.9 | 61.6 | 65.2 | **66.6** |
| RealWorldQA | 68.8 | 71.1 | 68.0 | 70.7 | 64.4 | 71.1 | **72.5** |
| BLINK | 56.1 | **56.6** | 53.9 | 48.5 | 50.6 | 53.0 | 54.3 |
| QBench | 77.9 | 73.8 | 78.7 | 76.7 | 71.5 | 78.1 | **78.9** |
| ABench | 75.6 | 77.0 | **77.5** | 74.4 | 75.9 | **77.5** | 76.4 |
| MTVQA | 28.5 | 27.2 | 23.1 | 19.2 | 28 | 29.4 | **29.7** |


### Video Benchmark


| Benchmark           | Qwen2.5-VL-7B | InternVL2.5-8B | LLaVA-OV-7B | InternVL2.5-4B | Ovis2-4B  | Ovis2-8B      |
|:--------------------|:-------------:|:--------------:|:-----------:|:--------------:|:---------:|:-------------:|
| VideoMME(wo/w-subs) | 65.1/71.6 | 64.2/66.9 | 58.2/61.5 | 62.3/63.6 | 64.0/66.3 | **68.0/71.6** |
| MVBench | 69.6 | **72.0** | 56.7 | 71.6 | 68.45 | 68.15 |
| MLVU(M-Avg/G-Avg) | 70.2/- | 68.9/- | 64.7/- | 68.3/- | 70.8/4.23 | **76.4**/4.25 |
| MMBench-Video | 1.79 | 1.68 | - | 1.73 | 1.69 | **1.85** |
| TempCompass | **71.7** | - | - | - | 67.02 | 69.28 |


## Usage


Below is a code snippet demonstrating how to run Ovis with various input types. For additional usage instructions, including inference wrapper and Gradio UI, please refer to [Ovis GitHub](https://github.com/AIDC-AI/Ovis?tab=readme-ov-file#inference).


Binary file not shown.

Binary file not shown.
