first commit

commit dc5098d85c

@@ -0,0 +1,33 @@
*.7z filter=lfs diff=lfs merge=lfs -text
*.arrow filter=lfs diff=lfs merge=lfs -text
*.bin filter=lfs diff=lfs merge=lfs -text
*.bz2 filter=lfs diff=lfs merge=lfs -text
*.ftz filter=lfs diff=lfs merge=lfs -text
*.gz filter=lfs diff=lfs merge=lfs -text
*.h5 filter=lfs diff=lfs merge=lfs -text
*.joblib filter=lfs diff=lfs merge=lfs -text
*.lfs.* filter=lfs diff=lfs merge=lfs -text
*.mlmodel filter=lfs diff=lfs merge=lfs -text
*.model filter=lfs diff=lfs merge=lfs -text
*.msgpack filter=lfs diff=lfs merge=lfs -text
*.npy filter=lfs diff=lfs merge=lfs -text
*.npz filter=lfs diff=lfs merge=lfs -text
*.onnx filter=lfs diff=lfs merge=lfs -text
*.ot filter=lfs diff=lfs merge=lfs -text
*.parquet filter=lfs diff=lfs merge=lfs -text
*.pb filter=lfs diff=lfs merge=lfs -text
*.pickle filter=lfs diff=lfs merge=lfs -text
*.pkl filter=lfs diff=lfs merge=lfs -text
*.pt filter=lfs diff=lfs merge=lfs -text
*.pth filter=lfs diff=lfs merge=lfs -text
*.rar filter=lfs diff=lfs merge=lfs -text
saved_model/**/* filter=lfs diff=lfs merge=lfs -text
*.tar.* filter=lfs diff=lfs merge=lfs -text
*.tflite filter=lfs diff=lfs merge=lfs -text
*.tgz filter=lfs diff=lfs merge=lfs -text
*.wasm filter=lfs diff=lfs merge=lfs -text
*.xz filter=lfs diff=lfs merge=lfs -text
*.zip filter=lfs diff=lfs merge=lfs -text
*.zst filter=lfs diff=lfs merge=lfs -text
*tfevents* filter=lfs diff=lfs merge=lfs -text
model.safetensors filter=lfs diff=lfs merge=lfs -text

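The rules in this hunk route matching files through Git LFS. As a rough illustration only, the sketch below checks file names against a subset of those patterns with Python's `fnmatch`. Real attribute matching follows gitignore-style rules, so this is an approximation, and the helper name `is_lfs_tracked` is hypothetical.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# A subset of the patterns listed in the hunk above.
LFS_PATTERNS = [
    "*.bin",
    "*.h5",
    "*.onnx",
    "*.pt",
    "*.tar.*",
    "*tfevents*",
    "model.safetensors",
]


def is_lfs_tracked(path: str) -> bool:
    """Approximate check: does the file's base name match any LFS pattern?"""
    name = PurePosixPath(path).name
    return any(fnmatchcase(name, pattern) for pattern in LFS_PATTERNS)


for candidate in ["pytorch_model.bin", "config.json", "model.safetensors"]:
    print(candidate, is_lfs_tracked(candidate))
```

Directory patterns such as `saved_model/**/*` are left out because `fnmatch` does not model path-aware matching.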

@@ -0,0 +1,92 @@
---
license: apache-2.0
tags:
- object-detection
- vision
datasets:
- coco
widget:
- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/savanna.jpg
  example_title: Savanna
- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/football-match.jpg
  example_title: Football Match
- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/airport.jpg
  example_title: Airport
---

# Conditional DETR model with ResNet-50 backbone

Conditional DEtection TRansformer (DETR) model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper [Conditional DETR for Fast Training Convergence](https://arxiv.org/abs/2108.06152) by Meng et al. and first released in [this repository](https://github.com/Atten4Vis/ConditionalDETR).


## Model description

|

||||

|

||||

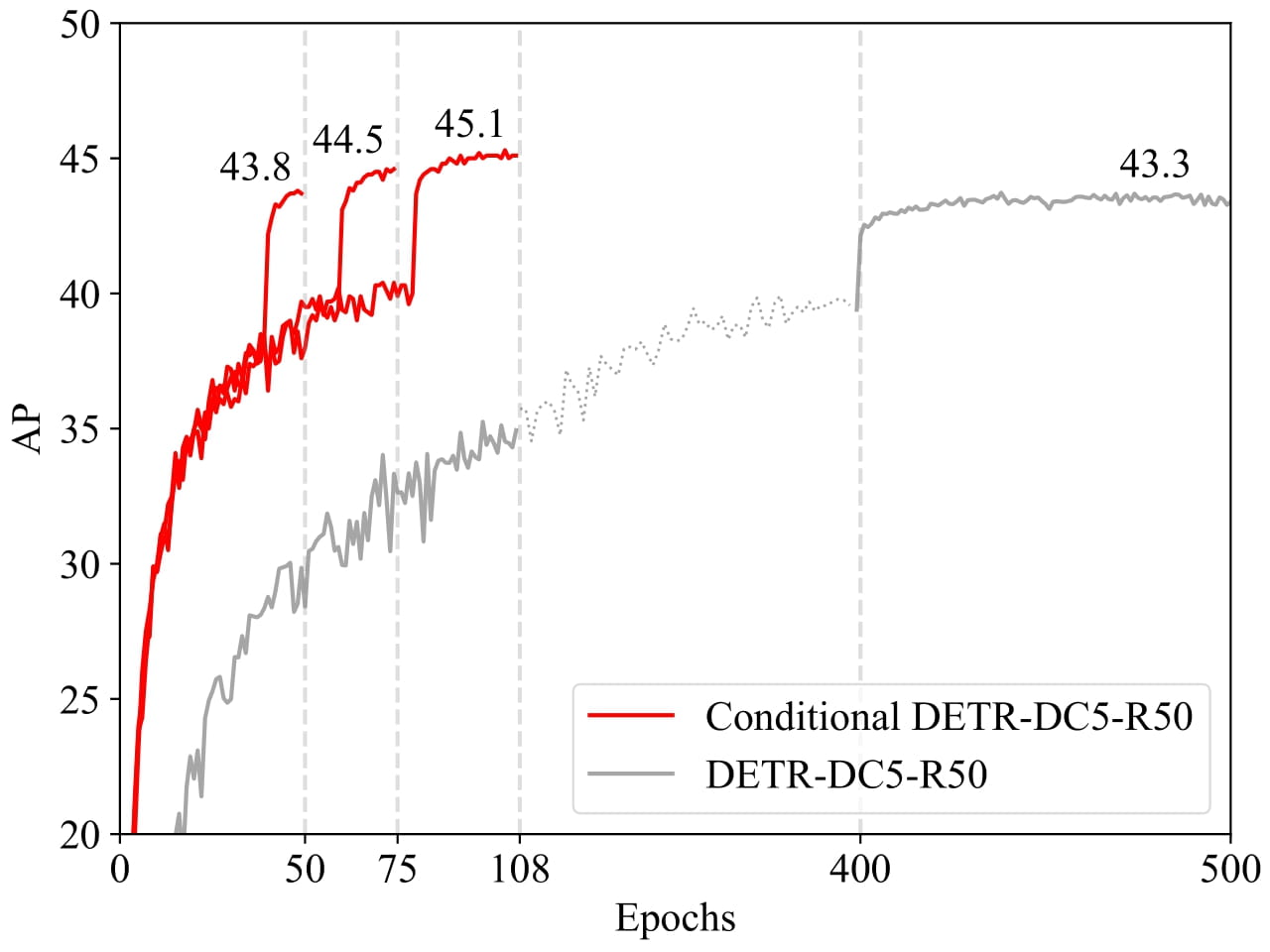

The recently-developed DETR approach applies the transformer encoder and decoder architecture to object detection and achieves promising performance. In this paper, we handle the critical issue, slow training convergence, and present a conditional cross-attention mechanism for fast DETR training. Our approach is motivated by that the cross-attention in DETR relies highly on the content embeddings for localizing the four extremities and predicting the box, which increases the need for high-quality content embeddings and thus the training difficulty. Our approach, named conditional DETR, learns a conditional spatial query from the decoder embedding for decoder multi-head cross-attention. The benefit is that through the conditional spatial query, each cross-attention head is able to attend to a band containing a distinct region, e.g., one object extremity or a region inside the object box. This narrows down the spatial range for localizing the distinct regions for object classification and box regression, thus relaxing the dependence on the content embeddings and easing the training. Empirical results show that conditional DETR converges 6.7× faster for the backbones R50 and R101 and 10× faster for stronger backbones DC5-R50 and DC5-R101.

|

||||

|

||||

|

||||

|

||||
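To make the idea of a separate spatial query more concrete, here is a toy sketch. It assumes (this detail is not stated in the description above) that the content and spatial parts of queries and keys are concatenated, so the attention logit splits into a content term plus a spatial term. All vectors and the scaling vector `lam` are made-up values, not anything taken from the model.

```python
def dot(u, v):
    """Plain dot product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))


# Toy 2-d content (c) and spatial (p) embeddings for one query and one key.
c_q, c_k = [0.5, -1.0], [2.0, 0.25]
p_s, p_k = [1.0, 2.0], [0.5, 0.5]

# Assumed form of the conditional spatial query: the reference-point
# embedding p_s is scaled element-wise by a vector that would be predicted
# from the decoder embedding (fixed here for illustration).
lam = [0.1, 0.3]
p_q = [scale * value for scale, value in zip(lam, p_s)]

# List "+" concatenates, so this is the logit of the concatenated vectors.
concat_logit = dot(c_q + p_q, c_k + p_k)

# The same logit written as a content term plus a spatial term.
content_term = dot(c_q, c_k)
spatial_term = dot(p_q, p_k)

print(concat_logit, content_term + spatial_term)
```

Under this assumption there are no content-spatial cross terms, which is one way to read the claim that the spatial query relaxes the dependence on content embeddings.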

## Intended uses & limitations

You can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=microsoft/conditional-detr) to look for all available Conditional DETR models.

### How to use

Here is how to use this model:

```python
from transformers import AutoImageProcessor, ConditionalDetrForObjectDetection
import torch
from PIL import Image
import requests

# download a sample COCO validation image
url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

processor = AutoImageProcessor.from_pretrained("microsoft/conditional-detr-resnet-50")
model = ConditionalDetrForObjectDetection.from_pretrained("microsoft/conditional-detr-resnet-50")

inputs = processor(images=image, return_tensors="pt")
outputs = model(**inputs)

# convert outputs (bounding boxes and class logits) to COCO API format
# let's only keep detections with score > 0.7
# PIL reports size as (width, height); target_sizes expects (height, width)
target_sizes = torch.tensor([image.size[::-1]])
results = processor.post_process_object_detection(outputs, target_sizes=target_sizes, threshold=0.7)[0]

for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
    box = [round(i, 2) for i in box.tolist()]
    print(
        f"Detected {model.config.id2label[label.item()]} with confidence "
        f"{round(score.item(), 3)} at location {box}"
    )
```

This should output:
```
Detected remote with confidence 0.833 at location [38.31, 72.1, 177.63, 118.45]
Detected cat with confidence 0.831 at location [9.2, 51.38, 321.13, 469.0]
Detected cat with confidence 0.804 at location [340.3, 16.85, 642.93, 370.95]
```

Currently, both the image processor and the model support PyTorch.

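For intuition about the box coordinates printed above, the sketch below converts one box from normalized (center_x, center_y, width, height), the format DETR-style models are generally understood to predict, into absolute (x_min, y_min, x_max, y_max) pixel corners. The helper name `to_pixel_corners` and the sample box are hypothetical, and this is not the library's own implementation.

```python
def to_pixel_corners(box, image_height, image_width):
    """Convert a normalized (cx, cy, w, h) box to pixel (x_min, y_min, x_max, y_max)."""
    cx, cy, w, h = box
    x_min = (cx - w / 2) * image_width
    y_min = (cy - h / 2) * image_height
    x_max = (cx + w / 2) * image_width
    y_max = (cy + h / 2) * image_height
    return [round(value, 2) for value in (x_min, y_min, x_max, y_max)]


# A made-up box centred in a 640x480 (width x height) image.
print(to_pixel_corners((0.5, 0.5, 0.25, 0.5), image_height=480, image_width=640))
# → [240.0, 120.0, 400.0, 360.0]
```

Note the (height, width) argument order, which mirrors why the usage example reverses `image.size` before building `target_sizes`.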

## Training data

The Conditional DETR model was trained on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset consisting of 118k/5k annotated images for training/validation respectively.

### BibTeX entry and citation info

```bibtex
@inproceedings{MengCFZLYS021,
  author    = {Depu Meng and
               Xiaokang Chen and
               Zejia Fan and
               Gang Zeng and
               Houqiang Li and
               Yuhui Yuan and
               Lei Sun and
               Jingdong Wang},
  title     = {Conditional {DETR} for Fast Training Convergence},
  booktitle = {2021 {IEEE/CVF} International Conference on Computer Vision, {ICCV}
               2021, Montreal, QC, Canada, October 10-17, 2021},
}
```

@@ -0,0 +1,217 @@
{
  "activation_dropout": 0.0,
  "activation_function": "relu",
  "architectures": [
    "ConditionalDETRForObjectDetection"
  ],
  "attention_dropout": 0.0,
  "auxiliary_loss": false,
  "backbone": "resnet50",
  "bbox_cost": 5,
  "bbox_loss_coefficient": 5,
  "class_cost": 2,
  "cls_loss_coefficient": 2,
  "d_model": 256,
  "decoder_attention_heads": 8,
  "decoder_ffn_dim": 2048,
  "decoder_layerdrop": 0.0,
  "decoder_layers": 6,
  "dice_loss_coefficient": 1,
  "dilation": false,
  "dropout": 0.1,
  "encoder_attention_heads": 8,
  "encoder_ffn_dim": 2048,
  "encoder_layerdrop": 0.0,
  "encoder_layers": 6,
  "focal_alpha": 0.25,
  "giou_cost": 2,
  "giou_loss_coefficient": 2,
  "id2label": {
    "0": "N/A",
    "1": "person",
    "2": "bicycle",
    "3": "car",
    "4": "motorcycle",
    "5": "airplane",
    "6": "bus",
    "7": "train",
    "8": "truck",
    "9": "boat",
    "10": "traffic light",
    "11": "fire hydrant",
    "12": "N/A",
    "13": "stop sign",
    "14": "parking meter",
    "15": "bench",
    "16": "bird",
    "17": "cat",
    "18": "dog",
    "19": "horse",
    "20": "sheep",
    "21": "cow",
    "22": "elephant",
    "23": "bear",
    "24": "zebra",
    "25": "giraffe",
    "26": "N/A",
    "27": "backpack",
    "28": "umbrella",
    "29": "N/A",
    "30": "N/A",
    "31": "handbag",
    "32": "tie",
    "33": "suitcase",
    "34": "frisbee",
    "35": "skis",
    "36": "snowboard",
    "37": "sports ball",
    "38": "kite",
    "39": "baseball bat",
    "40": "baseball glove",
    "41": "skateboard",
    "42": "surfboard",
    "43": "tennis racket",
    "44": "bottle",
    "45": "N/A",
    "46": "wine glass",
    "47": "cup",
    "48": "fork",
    "49": "knife",
    "50": "spoon",
    "51": "bowl",
    "52": "banana",
    "53": "apple",
    "54": "sandwich",
    "55": "orange",
    "56": "broccoli",
    "57": "carrot",
    "58": "hot dog",
    "59": "pizza",
    "60": "donut",
    "61": "cake",
    "62": "chair",
    "63": "couch",
    "64": "potted plant",
    "65": "bed",
    "66": "N/A",
    "67": "dining table",
    "68": "N/A",
    "69": "N/A",
    "70": "toilet",
    "71": "N/A",
    "72": "tv",
    "73": "laptop",
    "74": "mouse",
    "75": "remote",
    "76": "keyboard",
    "77": "cell phone",
    "78": "microwave",
    "79": "oven",
    "80": "toaster",
    "81": "sink",
    "82": "refrigerator",
    "83": "N/A",
    "84": "book",
    "85": "clock",
    "86": "vase",
    "87": "scissors",
    "88": "teddy bear",
    "89": "hair drier",
    "90": "toothbrush"
  },
  "init_std": 0.02,
  "init_xavier_std": 1.0,
  "is_encoder_decoder": true,
  "label2id": {
    "N/A": 83,
    "airplane": 5,
    "apple": 53,
    "backpack": 27,
    "banana": 52,
    "baseball bat": 39,
    "baseball glove": 40,
    "bear": 23,
    "bed": 65,
    "bench": 15,
    "bicycle": 2,
    "bird": 16,
    "boat": 9,
    "book": 84,
    "bottle": 44,
    "bowl": 51,
    "broccoli": 56,
    "bus": 6,
    "cake": 61,
    "car": 3,
    "carrot": 57,
    "cat": 17,
    "cell phone": 77,
    "chair": 62,
    "clock": 85,
    "couch": 63,
    "cow": 21,
    "cup": 47,
    "dining table": 67,
    "dog": 18,
    "donut": 60,
    "elephant": 22,
    "fire hydrant": 11,
    "fork": 48,
    "frisbee": 34,
    "giraffe": 25,
    "hair drier": 89,
    "handbag": 31,
    "horse": 19,
    "hot dog": 58,
    "keyboard": 76,
    "kite": 38,
    "knife": 49,
    "laptop": 73,
    "microwave": 78,
    "motorcycle": 4,
    "mouse": 74,
    "orange": 55,
    "oven": 79,
    "parking meter": 14,
    "person": 1,
    "pizza": 59,
    "potted plant": 64,
    "refrigerator": 82,
    "remote": 75,
    "sandwich": 54,
    "scissors": 87,
    "sheep": 20,
    "sink": 81,
    "skateboard": 41,
    "skis": 35,
    "snowboard": 36,
    "spoon": 50,
    "sports ball": 37,
    "stop sign": 13,
    "suitcase": 33,
    "surfboard": 42,
    "teddy bear": 88,
    "tennis racket": 43,
    "tie": 32,
    "toaster": 80,
    "toilet": 70,
    "toothbrush": 90,
    "traffic light": 10,
    "train": 7,
    "truck": 8,
    "tv": 72,
    "umbrella": 28,
    "vase": 86,
    "wine glass": 46,
    "zebra": 24
  },
  "mask_loss_coefficient": 1,
  "max_position_embeddings": 1024,
  "model_type": "conditional_detr",
  "num_hidden_layers": 6,
  "num_queries": 300,
  "position_embedding_type": "sine",
  "scale_embedding": false,
  "torch_dtype": "float32",
  "transformers_version": "4.22.0.dev0"
}

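In this config, `id2label` keeps several placeholder `N/A` entries for unused category IDs, while `label2id` contains a single `"N/A": 83`. One plausible explanation, which is an assumption and not something the commit confirms, is that `label2id` was produced by inverting `id2label`, so that later duplicates overwrite earlier ones. A minimal sketch on a small excerpt of the mapping:

```python
# Excerpt of id2label from the config above (not the full mapping).
id2label = {"0": "N/A", "1": "person", "12": "N/A", "83": "N/A", "84": "book"}

# Inverting the mapping: for duplicate labels, the last ID seen wins.
label2id = {label: int(index) for index, label in id2label.items()}

# Labels that correspond to real object categories.
real_labels = [label for label in id2label.values() if label != "N/A"]

print(label2id)
print(real_labels)
```

When mapping predictions back to names, it is therefore safer to go through `id2label` than to rely on `label2id` being a full inverse.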

Binary file not shown.


@@ -0,0 +1,17 @@
{
  "do_normalize": true,
  "do_resize": true,
  "image_processor_type": "ConditionalDetrImageProcessor",
  "format": "coco_detection",
  "image_mean": [
    0.485,
    0.456,
    0.406
  ],
  "image_std": [
    0.229,
    0.224,
    0.225
  ],
  "size": {"shortest_edge": 800, "longest_edge": 1333}
}

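The preprocessing settings above can be read as two steps: resize so the shorter side reaches `shortest_edge` unless that would push the longer side past `longest_edge`, then normalize each channel with `image_mean` and `image_std`. The sketch below is one interpretation under stated assumptions: the helper names are hypothetical, pixel values are assumed to be rescaled to [0, 1] before normalization, and the library's exact rounding may differ.

```python
IMAGE_MEAN = (0.485, 0.456, 0.406)
IMAGE_STD = (0.229, 0.224, 0.225)


def resized_shape(height, width, shortest_edge=800, longest_edge=1333):
    """Return (new_height, new_width), keeping the aspect ratio (integer math)."""
    short, long = min(height, width), max(height, width)
    if long * shortest_edge > longest_edge * short:
        # Scaling the short side to shortest_edge would overshoot longest_edge.
        new_short, new_long = longest_edge * short // long, longest_edge
    else:
        new_short, new_long = shortest_edge, shortest_edge * long // short
    return (new_short, new_long) if height <= width else (new_long, new_short)


def normalize_pixel(rgb):
    """Rescale 0-255 channel values to [0, 1], then apply (x - mean) / std."""
    return tuple((c / 255 - m) / s for c, m, s in zip(rgb, IMAGE_MEAN, IMAGE_STD))


print(resized_shape(480, 640))   # → (800, 1066)
print(resized_shape(400, 1000))  # → (533, 1333)
print(normalize_pixel((255, 255, 255)))
```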

Binary file not shown.
