
| base_model | language | pipeline_tag | tags | library_name | license | license_link |
|---|---|---|---|---|---|---|
| Qwen/Qwen2.5-Math-7B | | text-generation | | transformers | apache-2.0 | https://huggingface.co/Qwen/Qwen2.5-Math-7B-Instruct/blob/main/LICENSE |

Qwen2.5-Math-7B-Instruct

> [!Warning]
>
> 🚨 Qwen2.5-Math mainly supports solving English and Chinese math problems through CoT and TIR. We do not recommend using this series of models for other tasks.

Introduction

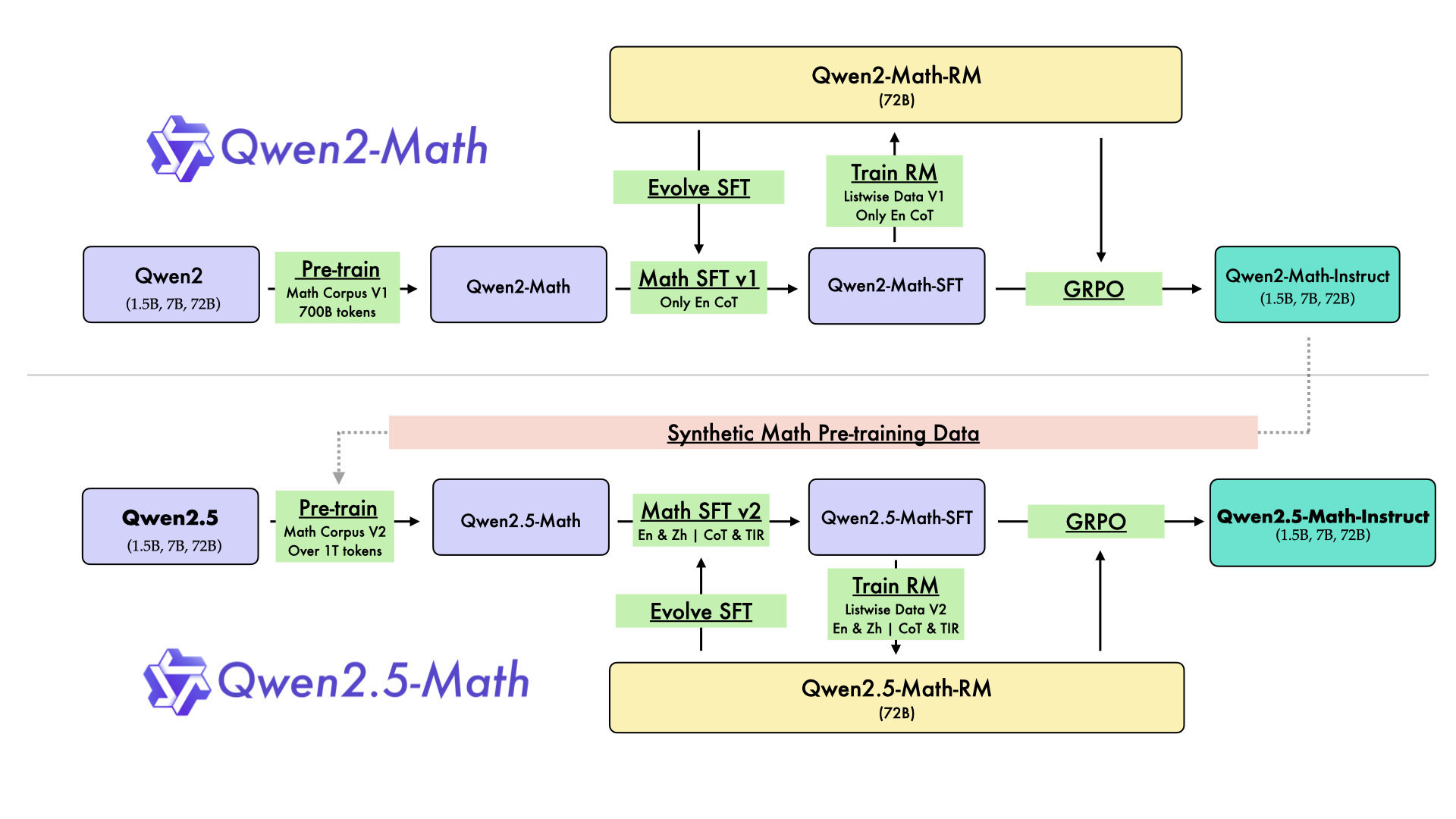

In August 2024, we released Qwen2-Math, the first series of mathematical LLMs in our Qwen family. A month later, we upgraded it and open-sourced the Qwen2.5-Math series, including the base models Qwen2.5-Math-1.5B/7B/72B, the instruction-tuned models Qwen2.5-Math-1.5B/7B/72B-Instruct, and the mathematical reward model Qwen2.5-Math-RM-72B.

Unlike the Qwen2-Math series, which only supports Chain-of-Thought (CoT) for solving English math problems, the Qwen2.5-Math series supports both CoT and Tool-integrated Reasoning (TIR) for solving math problems in both Chinese and English. With CoT, the Qwen2.5-Math models achieve significant performance improvements over the Qwen2-Math models on Chinese and English mathematics benchmarks.

While CoT plays a vital role in enhancing the reasoning capabilities of LLMs, it struggles with computational accuracy and with complex mathematical or algorithmic reasoning tasks, such as finding the roots of a quadratic equation or computing the eigenvalues of a matrix. TIR further improves the model's proficiency in precise computation, symbolic manipulation, and algorithmic manipulation. Using TIR, Qwen2.5-Math-1.5B/7B/72B-Instruct score 79.7, 85.3, and 87.8, respectively, on the MATH benchmark.
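With TIR, the model can hand such computations to a program instead of carrying them out in prose. As an illustration only (this README does not specify the model's TIR output format, and the function names below are hypothetical), both tasks mentioned above reduce to a few lines of standard-library Python. The eigenvalues of a 2×2 matrix [[p, q], [r, s]] are the roots of its characteristic polynomial x² − (p + s)x + (ps − qr), so one quadratic solver covers both:

```python
import cmath

def quadratic_roots(a, b, c):
    """Return both roots of a*x**2 + b*x + c = 0 (a != 0) as complex numbers."""
    disc = cmath.sqrt(b * b - 4 * a * c)  # complex sqrt handles negative discriminants
    return ((-b + disc) / (2 * a), (-b - disc) / (2 * a))

def eigenvalues_2x2(m):
    """Eigenvalues of [[p, q], [r, s]] via the characteristic polynomial x^2 - (p+s)x + (ps - qr)."""
    (p, q), (r, s) = m
    return quadratic_roots(1, -(p + s), p * s - q * r)

print(quadratic_roots(1, -3, 2))          # -> ((2+0j), (1+0j))
print(eigenvalues_2x2([[2, 1], [1, 2]]))  # -> ((3+0j), (1+0j))
```

Executing code like this gives exact arithmetic that a purely textual chain of thought can get wrong.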

Model Details

For more details, please refer to our blog post and GitHub repo.

Requirements

`transformers>=4.37.0` is required for Qwen2.5-Math models. The latest version is recommended.

> [!Warning]
>
> 🚨 This is a must because `transformers` has integrated the Qwen2 code since `4.37.0`.
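To confirm the requirement before loading the model, a minimal sketch using only the standard library's `importlib.metadata` could look like this. The helper names are hypothetical, and the parser is deliberately simple: it keeps only the leading numeric release, so a pre-release such as `4.37.0rc1` is treated as `4.37.0`.

```python
from importlib.metadata import PackageNotFoundError, version

# Minimum version stated in this README
MIN_TRANSFORMERS = (4, 37, 0)

def parse_release(v: str) -> tuple:
    """Parse the leading numeric release of a version string into a 3-tuple of ints.

    Simplification: suffixes such as 'rc1' or '.dev0' are ignored.
    """
    parts = []
    for piece in v.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
        if len(digits) < len(piece):
            break  # a suffix like 'rc1' ends the numeric release
    while len(parts) < 3:
        parts.append(0)  # treat '4.37' as '4.37.0'
    return tuple(parts[:3])

def transformers_is_supported() -> bool:
    """True if an installed transformers meets the minimum version, False otherwise."""
    try:
        installed = version("transformers")
    except PackageNotFoundError:
        return False
    return parse_release(installed) >= MIN_TRANSFORMERS

print(transformers_is_supported())
```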

For GPU memory requirements and the corresponding throughput, see the similar results for Qwen2 here.
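Until you consult those measurements, a rough lower bound is the memory needed for the weights alone: parameter count times bytes per parameter. The sketch below is a back-of-the-envelope estimate, not the Qwen2 benchmark figures. It ignores activations, the KV cache, and framework overhead, and the nominal 7e9 parameter count is an assumption for illustration.

```python
# Bytes needed to store one parameter in each dtype (int4 packs two weights per byte)
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "float16": 2, "int8": 1, "int4": 0.5}

def weight_memory_gib(num_params: float, dtype: str) -> float:
    """Memory for the weights alone, in GiB; excludes activations, KV cache, and overhead."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30

# Assumed nominal size; check the checkpoint itself for the exact parameter count.
NUM_PARAMS = 7e9
for dtype in ("bfloat16", "int8", "int4"):
    print(f"{dtype}: {weight_memory_gib(NUM_PARAMS, dtype):.1f} GiB")
```

Actual peak usage during generation is higher and grows with batch size and sequence length.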

Quick Start

> [!Important]
>
> Qwen2.5-Math-7B-Instruct is an instruction model for chatting;
>
> Qwen2.5-Math-7B is a base model typically used for completion and few-shot inference, serving as a better starting point for fine-tuning.
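Because the base model is meant for completion rather than chat, few-shot inference feeds it plain text (no chat template) that ends exactly where the answer should begin. A minimal sketch follows. The `Question:`/`Answer:` layout and the helper name `build_few_shot_prompt` are illustrative assumptions, not a format prescribed for Qwen2.5-Math.

```python
def build_few_shot_prompt(examples, question):
    """Concatenate solved (question, answer) pairs, then leave the final answer open for completion."""
    blocks = [f"Question: {q}\nAnswer: {a}" for q, a in examples]
    blocks.append(f"Question: {question}\nAnswer:")
    return "\n\n".join(blocks)

# Hypothetical demonstrations; in practice these would be worked problems from your task
examples = [
    ("What is 2 + 3?", "5"),
    ("What is 7 * 6?", "42"),
]
prompt = build_few_shot_prompt(examples, "What is 9 - 4?")
print(prompt)
```

The resulting string can be tokenized directly with `tokenizer(prompt, return_tensors="pt")` and passed to `model.generate`, skipping `apply_chat_template`.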

🤗 Hugging Face Transformers

Qwen2.5-Math can be deployed and used for inference in the same way as Qwen2.5. The following code snippet shows how to use the chat model with `transformers`:

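```python
# ModelScope's Auto* classes wrap the transformers ones and download from the ModelScope hub.
# On the Hugging Face Hub, use `from transformers import ...` with "Qwen/Qwen2.5-Math-7B-Instruct".
from modelscope import AutoModelForCausalLM, AutoTokenizer

model_name = "qwen/Qwen2.5-Math-7B-Instruct"

# Load weights in their stored dtype; device_map="auto" (requires accelerate) places them on available GPUs
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype="auto",
    device_map="auto"
)
tokenizer = AutoTokenizer.from_pretrained(model_name)

prompt = "Find the value of $x$ that satisfies the equation $4x+5 = 6x+7$."
messages = [
    {"role": "system", "content": "You are Qwen, created by Alibaba Cloud. You are a helpful assistant."},
    {"role": "user", "content": prompt}
]

# Render the conversation with the model's chat template and append the assistant-turn prefix
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True
)
# Send the inputs to the device holding the model's first layers
model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

generated_ids = model.generate(
    **model_inputs,
    max_new_tokens=512
)
# Keep only the newly generated tokens by dropping the echoed prompt
generated_ids = [
    output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)
]

response = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]
print(response)
```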
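To check the model's response to the example prompt, it helps to know the expected answer. The equation has a short closed-form solution:

```latex
\begin{aligned}
4x + 5 &= 6x + 7 \\
5 - 7 &= 6x - 4x \\
-2 &= 2x \\
x &= -1
\end{aligned}
```

Substituting back gives $4(-1) + 5 = 1 = 6(-1) + 7$, so a correct response should conclude that $x = -1$.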

🤖 ModelScope

We strongly advise users, especially those in mainland China, to use ModelScope. `snapshot_download` can help you solve issues with downloading checkpoints.
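A minimal sketch of the `snapshot_download` route, assuming both `modelscope` and `transformers` are installed. The helper name `load_from_modelscope` is hypothetical. `snapshot_download` fetches the repository files into the local cache and returns that directory, and `from_pretrained` accepts a local directory in place of a model ID.

```python
def load_from_modelscope(model_id: str = "qwen/Qwen2.5-Math-7B-Instruct"):
    """Download a checkpoint via ModelScope, then load it with transformers from the local path."""
    # Imported lazily so this helper can be defined without the optional dependencies installed
    from modelscope import snapshot_download
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Downloads (or reuses the cached copy of) every file in the model repository
    model_dir = snapshot_download(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_dir, torch_dtype="auto", device_map="auto")
    tokenizer = AutoTokenizer.from_pretrained(model_dir)
    return model, tokenizer
```

Calling `model, tokenizer = load_from_modelscope()` then lets you reuse the rest of the Quick Start snippet unchanged.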

Citation

If you find our work helpful, feel free to give us a citation.

```bibtex
@article{yang2024qwen2,
  title={Qwen2 technical report},
  author={Yang, An and Yang, Baosong and Hui, Binyuan and Zheng, Bo and Yu, Bowen and Zhou, Chang and Li, Chengpeng and Li, Chengyuan and Liu, Dayiheng and Huang, Fei and others},
  journal={arXiv preprint arXiv:2407.10671},
  year={2024}
}
```